K&R has an exercise, 2-1, which is stated like this:

Write a program to determine the ranges of char, short, int, and long variables, both signed and unsigned, by printing appropriate values from standard headers and by direct computation. Harder if you compute them: determine the ranges of the various floating-point types.

This exercise only appears in the 2nd edition of K&R, which uses C89 (ANSI C).

This exercise forced me to learn a fair bit about the representation of floating point numbers. Really, I should know this stuff already, but the last time that I learned it I was probably under 18 years old -- more than 20 years ago; and back then, I didn't have enough knowledge to understand it. At the time, it was taught to me in a very first-principles way. I'm not saying that this is the wrong way to teach, but at that time it didn't work for me.

I actually got this information from a fun visualization on YouTube, so there are a few remaining perks to living in 2025.

There are 3 main components to floating point representation, which are all sub-components of a single type float. These are standardized under IEEE 754. The sizes below are in bits, for a 32-bit float.

- A sign bit (size 1).

- An exponent (size 8).

- A mantissa (size 23).

To 'decode' a number, you essentially multiply out all the components, according to a specific formula, where SIGN is either +1 or -1 and the exponent is a power of 2.

SIGN * MANTISSA * 2^EXPONENT

The strategy is basically analogous to scientific notation. Each part needs to be decoded separately. The mantissa essentially encodes a sum of repeatedly smaller binary fractions (1/2, 1/4, 1/8, ...), implicitly starting with 1., getting closer and closer to 2 at its maximum value. E.g. if there was a mantissa with a size of 1, it could either encode 1 or 1.5. If the mantissa had size 2, it could have values 1, 1.25, 1.5, or 1.75.
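To make the mantissa values concrete, here is a small sketch that enumerates everything a toy mantissa of a given width can encode. It's in Python rather than C purely for brevity, and the function name mantissa_values is my own invention for illustration.

```python
def mantissa_values(bits):
    """List every value 1 + k / 2**bits that a `bits`-wide mantissa can encode."""
    steps = 2 ** bits
    # The leading 1 is implicit; the stored bits only supply the fraction.
    return [1 + k / steps for k in range(steps)]


if __name__ == "__main__":
    for bits in (1, 2, 3):
        print(bits, mantissa_values(bits))
```

The largest value is always 2 - 1/2**bits, which is why the mantissa creeps towards 2 without ever reaching it.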

The exponent is encoded as a binary integer, but with a few quirks. A 'bias' is used instead of a sign bit. For instance, imagine an exponent of size 8. This exponent would have 256 possible values (0-255). However a bias of 127 is used. So subtracting the bias (255 - 127), we get 128 as the highest value. However we know (from reading the spec... right?) that the top exponent value is reserved to represent infinity and NaN. So the actual maximum is therefore 254 - 127 = 127. By the same token, the lowest value is also reserved, in this case to represent zero and the subnormal (denormalized) numbers. So the lowest value is therefore 0 - 127 + 1 = -126.
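Putting the three components together, this is a minimal sketch of a decoder for the 32-bit layout described above (1 sign bit, 8 exponent bits, 23 mantissa bits, bias 127). It uses Python's struct module to get at the bit pattern; the helper names float_bits and decode are hypothetical, and the two reserved exponent patterns are handled as special cases.

```python
import struct

BIAS = 127


def float_bits(x):
    """Return the 32-bit pattern of x when stored as a single-precision float."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def decode(bits):
    """Rebuild the value from sign, exponent and mantissa fields."""
    sign = -1 if bits >> 31 else 1
    exponent = (bits >> 23) & 0xFF
    fraction = bits & 0x7FFFFF
    if exponent == 0xFF:
        # All-ones exponent: infinity if the fraction is zero, NaN otherwise.
        return sign * float("inf") if fraction == 0 else float("nan")
    if exponent == 0:
        # All-zeros exponent: zero and subnormals, with no implicit leading 1.
        return sign * (fraction / 2**23) * 2.0 ** -126
    return sign * (1 + fraction / 2**23) * 2.0 ** (exponent - BIAS)


if __name__ == "__main__":
    for x in (1.0, -2.5, 0.15625):
        print(x, hex(float_bits(x)), decode(float_bits(x)))
```

Round-tripping a value through float_bits and decode should give back the same number, which is a handy sanity check on the formula.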

Apparently a side effect of this strategy is that precision gets worse as you approach the end of the range: the gap between adjacent representable values doubles every time the exponent goes up by one, so precision is better within the lower values. This does seem like a reasonable trade-off to me. However I do intuitively feel that fixed-point seems like a better approach for most problems that I actually encounter in computing.
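The precision trade-off can be measured directly. This sketch, again assuming the 32-bit IEEE 754 layout, finds the next representable float above a given one by adding 1 to its bit pattern and reports the gap. The names next_up and gap are made up for illustration, and the trick only holds for positive, finite values below the maximum.

```python
import struct


def next_up(x):
    """Next representable float32 above x (x positive, finite, below the max)."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return struct.unpack(">f", struct.pack(">I", bits + 1))[0]


def gap(x):
    """Distance from x to the next representable float32."""
    return next_up(x) - x


if __name__ == "__main__":
    for x in (1.0, 1024.0, 2.0 ** 24, 2.0 ** 100):
        print(x, gap(x))
```

Near 1 the gap is tiny, but from 2**24 onwards adjacent floats are at least 2 apart, so odd integers can no longer be represented at all.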

Note that the exercise has a big problem, which is that the details of floating point representation are actually not defined in C89; nothing in the standard requires IEEE 754. This is where language-lawyering the wording of the question gets a bit murky -- from the most abstract perspective, a float is just a method of storing a real number. You can't just impute a mantissa/exponent structure to it. However, perhaps the intended reading is for the word float itself to correspond directly to said representation, which is assumed background knowledge for the reader? We can't read K&R's minds, unfortunately.

There are several things to note. Using these facts about the representation requires the ability to compute powers. Luckily, K&R have already introduced a power routine, so we just need to rewrite this to work on floats. However, the journeyman programmer might wonder, won't this suffer from the notorious precision issues? Luckily, because multiplying by 2 operates directly on the exponent and doesn't touch the mantissa at all, it's always precise, as long as the result stays within the normal exponent range. This means that you can compute everything using the type in question (float or double).
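As a rough check of the claim that doubling is exact, this sketch builds powers of 2 by repeated multiplication and rounds to single precision after every step, then combines the largest mantissa with the largest exponent. It assumes the IEEE 754 32-bit layout; to_f32, power_of_two and largest_float32 are hypothetical helper names, and Python's struct stands in for a real C float.

```python
import struct


def to_f32(x):
    """Round a Python float (a double) to the nearest single-precision value."""
    return struct.unpack(">f", struct.pack(">f", x))[0]


def power_of_two(n):
    """Compute 2**n (n >= 0) by repeated doubling, rounding after every step."""
    result = to_f32(1.0)
    for _ in range(n):
        result = to_f32(result * 2)
    return result


def largest_float32():
    """Largest mantissa (2 - 2**-23) times the largest normal power, 2**127."""
    largest_mantissa = 2 - 1 / power_of_two(23)
    return to_f32(largest_mantissa * power_of_two(127))


if __name__ == "__main__":
    print(power_of_two(127) == 2.0 ** 127)
    print(largest_float32())
```

If any of the doublings had lost precision, the first comparison would fail, because 2.0 ** 127 is computed independently in double precision.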

The other thing to note is that while FLT_MAX is a valid comparison here, FLT_MIN is actually not that relevant. At least colloquially, when we talk about the range of a value, we mean its full range from the maximally-negative to the maximally-positive. So, strictly the endpoints would be -inf and +inf. However FLT_MIN is not actually the maximally-negative value; it is the smallest positive normalized value. The maximally-negative finite value would be -FLT_MAX.
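The difference between these endpoints is easy to see from the bit patterns. In this sketch the constants are Python stand-ins that I've named after the float.h macros, built from the IEEE 754 32-bit patterns; they are not read from a C header, and f32_from_bits is a made-up helper.

```python
import struct


def f32_from_bits(bits):
    """Interpret a 32-bit integer as a single-precision float."""
    return struct.unpack(">f", struct.pack(">I", bits))[0]


FLT_MAX = f32_from_bits(0x7F7FFFFF)        # largest finite value
FLT_MIN = f32_from_bits(0x00800000)        # smallest positive normalized value
MOST_NEGATIVE = f32_from_bits(0xFF7FFFFF)  # same as FLT_MAX with the sign bit set

if __name__ == "__main__":
    print("FLT_MAX      ", FLT_MAX)
    print("FLT_MIN      ", FLT_MIN)
    print("most negative", MOST_NEGATIVE)
```

FLT_MIN sits just above zero, while the most negative finite value is simply FLT_MAX with the sign flipped.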

The solution to the exercise that I eventually settled on uses a direct encoding of the representation rules for IEEE 754 floats: ex2-1.c.

However my intuitive sense of the intended solution to this problem suggests that it shouldn't rely on any knowledge of the internal structure. The closest that I could get to this was patterned on a suggestion from a Reddit user.

    #include <float.h>
    #include <stdio.h>

    /* Stage 1: the largest float reachable from 1 by repeated doubling. */
    float get_stage1_max(void) {
        float f = 1;
        float prev = f;

        /* Doubling stops changing f once f has overflowed to infinity. */
        while ((f * 2) != f) {
            prev = f;
            f *= 2;
        }
        return prev;
    }

    /* Stage 2: keep adding ever-smaller increments until f overflows. */
    float calculate_by_approach(float start_point) {
        float increment = start_point;
        float f = start_point;
        float prev = f;

        while (1) {
            increment /= 2;
            /* No change means f is already infinity, so prev is the answer. */
            if ((f + increment) == f)
                break;
            prev = f;
            f += increment;
        }
        return prev;
    }

    int main(void) {
        float result = calculate_by_approach(get_stage1_max());

        printf("Stage 2 max: %g\n", result);
        if (result == FLT_MAX) {
            printf("This is the largest float.\n");
        } else {
            printf("This is not the largest float.\n");
        }
        return 0;
    }

This calculates the value in 2 stages: first it calculates the largest float value that's reachable by doubling. Then it repeatedly adds smaller and smaller values, halving the increment each time, until infinity is reached, and gives the last value before that happened. Realistically this does rely on a number of assumptions about the behaviour of float arithmetic and therefore (by implication) about the internal structure of a float, but I believe that this is the closest one can get to a fully 'abstract' solution; happy to be corrected, though.
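One way to see how little the two-stage approach depends on the layout is to run it against a different type. This sketch transliterates the same algorithm to Python, whose floats are doubles in CPython, and compares against sys.float_info.max instead of FLT_MAX. It assumes that overflow in multiplication and addition quietly produces infinity, which is how IEEE 754 doubles behave in CPython; the function names are my own.

```python
import sys


def stage1_max():
    """Largest value reachable from 1.0 by repeated doubling."""
    f = 1.0
    prev = f
    # Doubling stops changing f once f has overflowed to infinity.
    while f * 2 != f:
        prev = f
        f *= 2
    return prev


def approach_max(start):
    """Add ever-smaller increments until f overflows; return the value before."""
    increment = start
    f = start
    prev = f
    while True:
        increment /= 2
        if f + increment == f:
            break
        prev = f
        f += increment
    return prev


if __name__ == "__main__":
    result = approach_max(stage1_max())
    print(result, result == sys.float_info.max)
```

No bit widths appear anywhere in the code, yet it lands on the maximum for a 64-bit type just as the C version does for a 32-bit one.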

This exercise really illustrates a characteristic of K&R: they can force you through so much complexity with just this tiny statement, a mere appendage to an exercise.

I visited Cambridge recently and went to some nice pubs. The Blue Moon: funky, vibey place with a nice beer garden. The Cambridge Blue: lovely bar staff, absolutely rammed in the beer garden when I went there, but with a very nice atmosphere and a huge bottle shop. The Devonshire Arms: loved this place, a kind of slightly anarcho/left vibe in the clientele, ramshackle in design and with a nice, open layout, super friendly bar staff.

Also visited The Baron of Beef, notorious for the fight between Clive Sinclair and the Acorn guy in Micro Men. I'm almost 100% sure that the scene wasn't actually filmed there, though the outside is pictured in the film. The Maypole was also a generally pleasant affair.

I have a short gap in my reading; here are some options:

- Kafka diaries

- Kristeva - Revolution in Poetic Language

- The Politics of Time

- Richard Norris - Strange Things are Happening

- Simon Reynolds latest

- Emma Dowling - The Care Crisis

- Abraham & Torok - Shell & the Kernel

- Milan Kundera (Misc)

- David Graeber - Debt

- Tomorrow and Tomorrow

- E. Rashkin - Psychoanalysis of Narrative

- Ferenczi - Thalassa

- Michel Foucault - History of Sexuality Vol 1

- Leader - What is Madness

I am reading (well, have now finished) L'Étranger by Albert Camus. It's made significantly easier by the fact that I can look words up on my ereader. However, the ereader can't resolve verb conjugations, meaning that automatic lookups are mostly limited to the infinitive form. As the infinitive doesn't occur very often, this means that lots of manual lookups happen.

Most of the sentences seem to be written in the 'passé composé' with the verb 'avoir', e.g. j'ai mangé = I ate. I initially mentally translate this as the English present perfect -- "I have eaten". I then need to mentally reshape it into the English simple past "I ate".

Some of the hardest parts:

- Words meaning when -- alors, quand, lorsque. I don't have a clue what the rule is for these, if there is one, and they all seem to have a bunch of other meanings.

- Words meaning 'still' -- toujours, encore, quand même. I don't get the rule for this.

- The word plus -- Such a simple word and seemingly with only one concrete meaning, yet it's deployed in thousands of different shades of meaning, and a surprising number of the usages are not particularly obvious. I think I basically never recognize the negative variation ne...plus, "not any more".

- Irregular past participles -- I basically have to remember all of these because I don't write them down. Luckily there aren't that many of them, but some of them are a pain -- particularly the irregular verbs with -voir endings, whose past participles often seem bizarre.

- Conjugations of être -- The fact that être conjugates into forms that start with s- and forms that start with e- is a never-ending source of bewilderment. It helps to remember that the verb is formed from mashing together the Latin verbs esse and stare. The future tense of être is particularly confusing.

- Various meanings for sentences with on as the subject -- I haven't quite grasped how to deal with this because it seems like there are maybe 3 different forms of this? One is the "us" meaning, one is an impersonal pronoun (like English 'one'), and I feel like there's one other form. Having reread about this now, the confusion makes sense, as we don't have quite such an ambiguous pronoun in English.

- The ne...que construction meaning only -- this normally causes a big double take, if I can even recognize it at all, and I misread all of these sentences until I learned about it.

- Multi-word phrases (idioms) -- these are very difficult. They are used all over the place and they can't be looked up in the dictionary. There's really no choice other than rote memorization.

I created an Anki deck from all of the vocabulary that I wrote down. It has every card tagged with parts of speech, and with limited English translations based on the usage in L'Étranger.

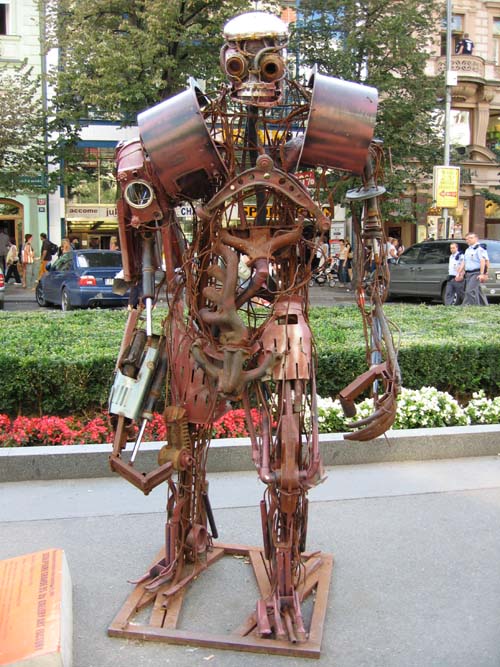

Stepan Tesar, Czech Republic - "Robot" 2.4x1x1 meters, iron, welded scrap. Robot machine is presented as human friend - helper or visitor, who wanders through the Wenceslas Square. The sculpture tries to bridge the barriers between people and artificial intelligence and integrate such 'modern slaves' in the society.

Photo by Courtney Powell

[[!meta title="Obholzer"]]

Obholzer's book has some interesting aspects to it.

- Debian's grub package now introduces the new flag GRUB_DISABLE_OS_PROBER, which defaults to true if not present in /etc/default/grub. I needed to explicitly set it to false to get my dual-boot setup to work. This is apparently an upstream change, e346414725a70e5c74ee87ca14e580c66f517666.

- Puppet was upgraded to puppetserver, which is Clojure-based. This actually worked fairly easily; fair play to Puppetlabs for seemingly being pretty serious about their compatibility story. There were only two things to care about really. The puppetlabs-apache module had a bug whose fix I needed to backport, and the path where agent reports were stored had changed from /var/cache/puppet/reports to /var/lib/puppetserver/reports.

When upgrading, there was an issue with PHP. I was required to remove existing PHP extensions that had been installed for 7.4, and replace them with the 8.2 versions.

Encountered bug 1000263. I solved most of these issues by installing the explicitly versioned PHP extension package from the archive. E.g., in my Puppet manifests, I previously had the following:

    package { "php-xml": ensure => installed }

I changed this to:

    package { "php${version}-xml": ensure => installed }

where $version is the version parameter. I am not totally sure why this succeeds. The exception is gettext, for which I had to use php-php-gettext.

Conky issue 1443 -- this caused conky to die completely until I added a config workaround.

After the upgrade I suggest completely removing all emacs packages (use apt-get, not aptitude) and clearing out the contents of /usr/share/emacs/site-lisp/elpa. Debian packages ship elisp source, which then gets byte-compiled into this directory by the maintainer scripts; the compiled output can go stale and should be removed. The catch is that you then need to reinstall every package you use that touches emacs, which is rather hard.

Silver is a fun game that's slightly hamstrung by its awkward control system. It's marketed as an RPG but is actually a strange cross-genre mix. Its kindred spirits are hack-n-slash games like Diablo, plus a bit of real-time strategy (yes, really). The mouse control is cool, but awkward. It's very cool to be able to use the mouse gestures to adjust to situational combat, but in reality this is hardly necessary: you can easily get by just button-mashing, and the game doesn't reward using the mouse mechanics enough. Moreover, it's impossible to focus on fancy mouse techniques because your party is constantly being bombarded by group attacks. It's way too easy to select the wrong character and accidentally cancel your strategy. I frequently ended up with the wrong stuff equipped; switching between magic and melee strategies is similarly difficult.

Overall the game is solid; there's nothing wrong with it (except for a few bugs in the port), but it lacks any really spectacular moments. The voice acting is excellent. The story and worldbuilding are OK. The difficulty level is easy to medium; it would benefit from some more challenge in places, but you can't make it significantly harder without fixing the control system. For example, I didn't block once in the entire game until the very final fight, which requires blocking.

The game is also let down by its camera: the character models are actually rather nice, if blocky and FF7-ish, but you can barely see them most of the time because the view is so zoomed out. Your character is always extremely tiny.

Notes

- Switch all your magic to L2/L1 because L3 drains MP reserves too fast.

- All characters have reserves of all special moves, when they have melee weapons equipped.

- Once you have heal magic, you can heal using this instead of using food. Food becomes nearly useless about half way through the game.

- There are some bugs in the Steam version with using potions. Your character ends up with the potion equipped and you can't change weapons or attack.

It's no secret to anyone that knows me: I bloody hate OAuth2. (I specifically say 2 because OAuth 1 was a radically different beast.) I recently had occasion to use the Pocket API. I have very mixed feelings about this service, but I have paid for it before (for quite some time). Now I am trying to use the Kobo integration which syncs articles from Pocket. This seems a much better solution than Send to Kindle, which I was previously using. However, to use it in practice I had to somehow archive 5000+ links which I had imported into it: my ~10 years of browser bookmark history.

I tried to use ChatGPT to generate this code, and it got something that looked very close but was in practice useless. It was faster for me to write the code from scratch than to debug the famous LLM's attempt. So maybe don't retire your keyboard hands just yet, console jockeys.

    import requests
    from flask import Flask, redirect, session

    app = Flask(__name__)
    app.secret_key = 'nonesuch'

    CONSUMER_KEY = 'MYCONSUMERKEY'
    REDIRECT_URI = 'http://localhost:5000/callback'
    BATCH_SIZE = 1000  # max 5000


    @app.route("/test")
    def test():
        # Step 1: get a request token, then send the user to Pocket to approve it.
        resp = requests.post('https://getpocket.com/v3/oauth/request', json={
            'consumer_key': CONSUMER_KEY,
            'redirect_uri': REDIRECT_URI,
            'state': 'nonesuch',
        }, headers={'X-Accept': 'application/json'})
        data = resp.json()
        request_token = data['code']
        session['request_token'] = request_token
        uri = f'https://getpocket.com/auth/authorize?request_token={request_token}&redirect_uri={REDIRECT_URI}'
        return redirect(uri)


    @app.route("/callback")
    def callback():
        # Step 2: swap the approved request token for an access token.
        print("using request token", session['request_token'])
        resp = requests.post(
            'https://getpocket.com/v3/oauth/authorize',
            json={
                'consumer_key': CONSUMER_KEY,
                'code': session['request_token']
            },
            headers={'X-Accept': 'application/json'}
        )
        print("Status code for authorize was", resp.status_code)
        print(resp.headers)
        result = resp.json()
        print(result)
        access_token = result['access_token']
        print("Access token is", access_token)

        # Step 3: fetch one batch of the oldest unread items.
        resp = requests.post(
            'https://getpocket.com/v3/get',
            json={
                'consumer_key': CONSUMER_KEY,
                'access_token': access_token,
                'state': 'unread',
                'sort': 'oldest',
                'detailType': 'simple',
                'count': BATCH_SIZE,
            }
        )
        x = resp.json()

        # Step 4: archive every item in the batch with a single request.
        # `or {}` keeps the loop safe if 'list' comes back empty.
        items = x.get('list') or {}
        actions = []
        for y in items.keys():
            actions.append({'action': 'archive', 'item_id': y})
        print("Sending", len(actions), "actions")
        resp = requests.post(
            'https://getpocket.com/v3/send',
            json={
                'actions': actions,
                'access_token': access_token,
                'consumer_key': CONSUMER_KEY
            }
        )
        print(resp.text)
        return f"<p>Access token is {access_token}</p>"

I believe it's mandatory to make this an actual web app, hence the use of Flask. I hate OAuth2. The Pocket implementation of OAuth2 is subtly quirky (what a freakin' surprise). Also, this API is pretty strange: it doesn't even make any attempt at being RESTful, though the operation batching is rather nifty. It's rather pleasant that you can work in batches of 1000 items at a time; I expected a lower limit. When I cranked the batch size up to 5000 I effectively KO'd the API and started getting 500s.

This script doesn't actually archive everything because it doesn't loop. That's left as an exercise for the reader for now.
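For what it's worth, the missing loop might look something like the sketch below. It is deliberately decoupled from the network: fetch_unread_ids and send_actions are hypothetical callables that would wrap the /v3/get and /v3/send requests from the script, and max_rounds is a safety cap that I've added so that a misbehaving API can't keep the loop spinning forever. The demo at the bottom runs against a fake in-memory account rather than Pocket.

```python
def archive_all(fetch_unread_ids, send_actions, max_rounds=100):
    """Archive unread items batch by batch until a fetch comes back empty.

    fetch_unread_ids() returns the item ids of the next batch (hypothetical).
    send_actions(actions) submits one batch of archive actions (hypothetical).
    Returns the number of items for which an archive action was sent.
    """
    archived = 0
    for _ in range(max_rounds):
        item_ids = fetch_unread_ids()
        if not item_ids:
            break
        actions = [{'action': 'archive', 'item_id': i} for i in item_ids]
        send_actions(actions)
        archived += len(actions)
    return archived


if __name__ == "__main__":
    # Stand-in for a Pocket account holding 2500 unread items.
    unread = [str(n) for n in range(2500)]

    def fake_fetch():
        return unread[:1000]

    def fake_send(actions):
        done = {a['item_id'] for a in actions}
        unread[:] = [i for i in unread if i not in done]

    print(archive_all(fake_fetch, fake_send), "items archived")
```

Passing the two operations in as functions keeps the loop testable without an access token, and keeps the batch size a concern of the fetch function alone.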

[[!meta title="Abraham & Torok"]]

I've reformatted this post as LaTeX, as it was getting too long. FIXME link the PDF.

This blog is powered by coffee and ikiwiki.